[video] Evolving Images for Visual Neurons Using a Deep Generative Network Reveals Coding Principles and Neuronal Preferences

Date Posted:

May 13, 2019

Date Recorded:

May 1, 2019

CBMM Speaker(s):

Gabriel Kreiman

Margaret S. Livingstone

Will Xiao

Carlos Ponce

Peter Schade

Description:

Five authors of the above paper, published in the journal "Cell", describe their methods and findings on exciting neurons using generative neural networks in real time.

GABRIEL KREIMAN: And so I've always been obsessed with this notion of the methodologies that we use to investigate what neurons like, meaning what kind of visual stimuli trigger neuron firing.

MARGARET LIVINGSTONE: Now, normally what everybody in the field does is just show them thousands of natural images, and find out that they like to respond to particular kinds of images, like faces or hands or bodies. But that's always a hypothesis, and you can't possibly test all natural images.

GABRIEL KREIMAN: So I've been posing this question to students in a class that I teach at Harvard.

WILL XIAO: I was new to the field, and I was basically really excited about the idea of brains, and neural networks, and generative adversarial neural networks. Those are all very hot topics, and really cool ideas to me. So I wanted to find a way to put them all together.

GABRIEL KREIMAN: And Will, who's one of the first authors, was one of the students in this class, and he took on these questions as a challenge in doing his homework. He came up with the idea of trying to combine generative algorithms with genetic algorithms, to try to let the neuron dictate what it likes.

CARLOS PONCE: One day I received an email from Gabriel Kreiman telling me that he, and a rotating student in his lab, Will Xiao, had developed a way to discover internal representations hidden in some artificial neurons of convolutional neural networks. And they could demonstrate that through a process that involved a genetic algorithm in combination with a generative adversarial network, they could evolve pictures that gave rise to activity in the cell that superseded those of natural images, including the ones that had been used to train the very same convolutional neural networks. And they asked me whether it was possible to translate this into primate electrophysiology.

MARGARET LIVINGSTONE: So I have a lab that records from inferotemporal cortex in macaque monkeys, and this student who was doing a rotation project, Will Xiao, came up with a program that would allow us to use neural networks to explore a huge image space to try to figure out what are the optimum images for inferotemporal cortex neurons.

PETER SCHADE: We did these experiments with chronically implanted arrays in rhesus macaques, and these arrays allow us to study the same population of neurons across days.

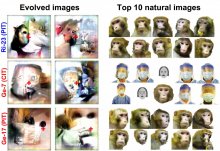

WILL XIAO: So we basically put a neuron online together in a closed loop with a generative neural network, and we put a genetic algorithm on top of this, so that the responses of the neurons can be used to guide the evolution of images that are made by the generative network, so that these images become progressively better for the neuron.
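[Editor's illustration] The closed loop described here can be sketched in a few lines of Python. This is only a toy under stated assumptions, not the authors' implementation: `generate_image` stands in for the deep generative network, `neuron_response` stands in for a recorded firing rate, and every name, parameter value, and the hidden `preferred` pattern is hypothetical. The loop scores each candidate image by the simulated response, keeps the best latent codes, and recombines and mutates them to form the next generation.

```python
import random


def generate_image(code):
    # Toy stand-in for a generative network: the "image" is the latent code
    # clipped to the range [-1, 1]. (Hypothetical; a real generator is a deep net.)
    return [max(-1.0, min(1.0, c)) for c in code]


def neuron_response(image, preferred):
    # Toy stand-in for a firing rate: larger (closer to 0) when the image is
    # closer to a hidden preferred pattern. (Hypothetical response model.)
    return -sum((p - q) ** 2 for p, q in zip(image, preferred))


def evolve(preferred, n_codes=20, code_len=8, n_generations=50,
           n_keep=5, mutation_sd=0.1, seed=0):
    rng = random.Random(seed)
    # Start from small random latent codes.
    population = [[rng.gauss(0, 0.3) for _ in range(code_len)]
                  for _ in range(n_codes)]
    best_per_generation = []
    for _ in range(n_generations):
        # "Show" every image to the simulated neuron and rank codes by response.
        scored = sorted(
            population,
            key=lambda c: neuron_response(generate_image(c), preferred),
            reverse=True,
        )
        best_per_generation.append(
            neuron_response(generate_image(scored[0]), preferred))
        # Keep the top codes unchanged, then fill the rest of the population
        # with children made by mixing two parents and adding Gaussian noise.
        parents = scored[:n_keep]
        children = []
        while len(parents) + len(children) < n_codes:
            a, b = rng.sample(parents, 2)
            child = [rng.choice(pair) + rng.gauss(0, mutation_sd)
                     for pair in zip(a, b)]
            children.append(child)
        population = parents + children
    return best_per_generation


# Hypothetical hidden preference of the simulated neuron.
preferred = [0.5, -0.5, 0.25, 0.0, -0.25, 0.75, -0.75, 0.1]
history = evolve(preferred)
```

Because the top codes are carried over unchanged, the best response in `history` never decreases from one generation to the next, which mirrors the "progressively better" images described above. In the real experiment the scoring step is a live recording rather than a function call.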

MARGARET LIVINGSTONE: So he, and Carlos, and Peter together, figured out how to integrate Will's program with our physiology rigs. Which was non-trivial, because you have to have three computers talking to each other constantly. And analyzing data. And generating images.

CARLOS PONCE: I remember lots of shouting because we had to be in different rooms. And I remember for the first few days not getting many interesting results, until finally, one day, we seemed to hit it and we saw that many of the cells that we were recording from began to exhibit increases in firing rate, while at the same time the images began to take form.

PETER SCHADE: Over an hour or two we would watch Will's algorithm and a generative network turn these black and white textures into these colorful scenes.

MARGARET LIVINGSTONE: It worked so well, the neuron we were recording from evolved something that looked just like a monkey face. Except it was distorted in a way that was really interesting. Everybody in the lab went crazy. And then a few days later, another cell evolved something that looked just like one of the care staff wearing the blue PPE that we all wear when we go in the monkey rooms.

WILL XIAO: And all of this happening in a part of the brain that people used to think cared about much lower level information.

GABRIEL KREIMAN: So when we saw these stimuli emerge, it was eye opening. It was essentially opening the doors to a completely new world of images that's distinct from anything that anyone had ever tried so far.

CARLOS PONCE: That was one of the most exciting moments in my scientific career.

GABRIEL KREIMAN: So this is the first time that we have a sense of neuronal preferences dictated by the neuron itself. And this was like creating something out of nothing at all.

WILL XIAO: In this research, we brought together tools and approaches from both computer science, and visual neuroscience research. And we developed a tool that's really useful for neuroscience research, but can also be informative for the study of artificial neural networks as well.

CARLOS PONCE: I am incredibly proud of this paper, and I really have to give credit to the team. This was one of those efforts for which everybody was essential. And everybody contributed something very important to it. I hope to continue this type of collaboration in the future with the CBMM community. It's an amazing community.
